yo — I’m diving deep into how LLMs actually work.

starting from absolute zero. beginner in AI/ML. this is my roadmap, my logbook, my come-back-here-and-understand-later space. also — apart from video content, i just chatgpt’d it. asked dumb questions. got slightly smarter answers. and tbh — i believe in free learning, not some rigid “roadmap” no one actually follows. we’re all just figuring it out as we go, right?

big shoutout to lossfunk & paras. we’ve never met (maybe a tweet reply once lol) but his book, his blog — they lit the fire. made me write mine, and dig deep into LLMs. impact? insane. respect, always. 🙏✨

🎯 the end goal (a.k.a. side quest)

i wanna research LLMs and make a wild discovery that gets me a nobel prize. (ok lol maybe not, but imagine the flex.) but for real — i just wanna understand how this whole thing works, and maybe build something cool of my own someday.

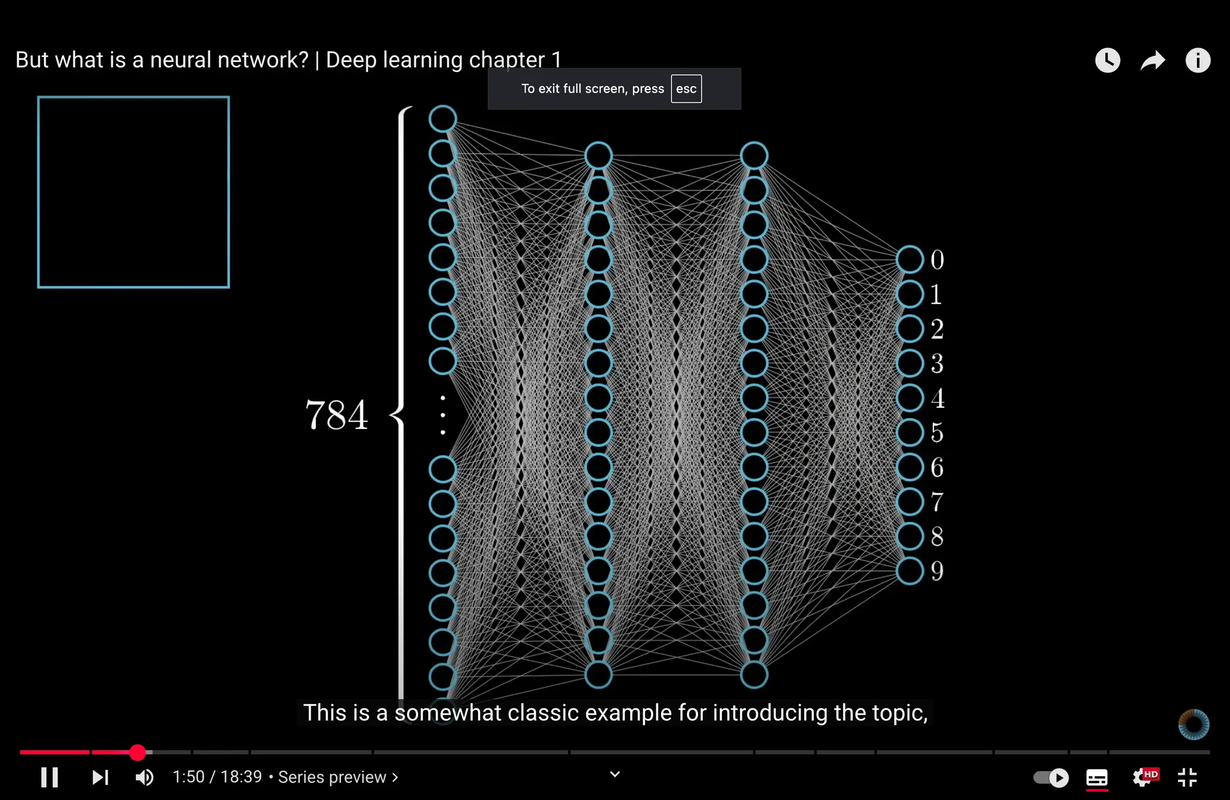

🧠 the brain parallel

just like our brain uses tiny neurons passing electrical and chemical signals to each other, these models kinda mimic that, but with math instead of meat.
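to make "math instead of meat" a bit more concrete, here's a tiny sketch of one artificial neuron in plain python. heads up: the function names (`sigmoid`, `neuron`) and all the numbers are made up by me for illustration. they're not from any library or from the videos, and real models stack huge numbers of these.

```python
import math


def sigmoid(x: float) -> float:
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """One artificial neuron: weighted sum of inputs, plus a bias, then an activation."""
    # each input gets multiplied by how much the neuron "cares" about it (its weight)
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    # the activation decides how strongly the neuron "fires"
    return sigmoid(total)


# hypothetical example values, chosen only to show the call
example_inputs = [0.5, 0.8]
example_weights = [1.0, -1.0]
example_bias = 0.0

print(neuron(example_inputs, example_weights, example_bias))
```

the output is always between 0 and 1: closer to 1 means the neuron is "firing" hard, closer to 0 means it's mostly quiet. "learning" is roughly the process of nudging those weights and the bias until the outputs are useful.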

📺 where i’m starting

i’m following the 3Blue1Brown Neural Networks playlist. our big boi is jacked with animations and explanations. should cover the theory nicely, from baby steps to galaxy brain.

3Blue1Brown will take your brain to the edge of explosion — but in the best way. he breaks things down so simply, you get it before you even realize you’re learning

🛠️ my tinker kit

i love to mess around, so i started a mini playground:

- Tinkerkit Neuron

- Teachable Machine

- Google Colab

- and of course, poked ChatGPT with dumb questions (since i had no clue how any of this works)

📄 papers i wanna vibe with

some legendary research drops i might explore:

- Attention is All You Need — the OG Transformer paper

- BERT — deep bidirectional vibes

- GPT-2 — multitasking like a champ

- GPT-3 — the one that went viral

- Scaling Laws for Neural Language Models — wild insights here

that’s it for now — just logging the first steps.

will update as i go deeper into the LLM rabbit hole 🌀